Machine learning research prepares Computer Science majors for competitive careers

During Student-Faculty Summer Research with Computer Science and Mathematics professors Ting Zhang and Paul Lin, six students used machine learning techniques to create computer models that work in ways similar to the human mind.

Aaliyah Winford and Yoann Olympio worked together, along with Pauline Tillotson, in Professor Paul Lin's lab to create a computer algorithm that could identify signs from American Sign Language in videos.

In the eight-week Student-Faculty Collaborative Summer Research Program, six McDaniel students designed computer models to classify videos of American Sign Language and of a variety of actions. To create their models, they used machine learning, a process that operates all around us every day but often goes unseen.

Machine learning is common in modern software, and employers in computer science seek it out as a skill set. In machine learning, a computer program is trained on examples so that it learns to recognize patterns and respond to them. Ting Zhang, assistant professor of Computer Science and Mathematics, says machine learning techniques are designed to mimic the way the human brain operates.

With machine learning, computer models appear to operate independently once they have the right parameters. Paul Lin, associate professor of Computer Science, says, “The main difference between machine learning and traditional computation is the ability for the model to make decisions.”

“Machine learning is all around us.”

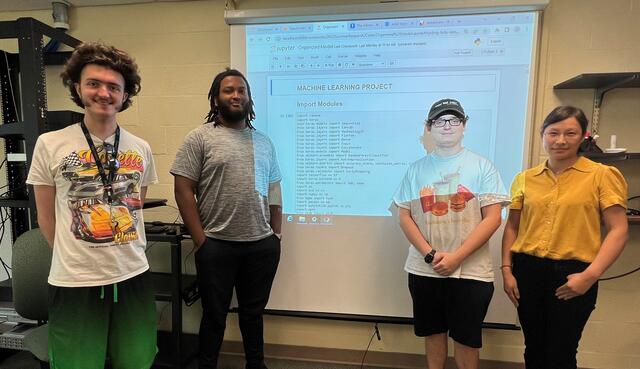

Students in Zhang’s lab studied “Using Convolutional Neural Networks for Action Recognition in Videos.” Computer Science majors Jamal Bourne, Shaun Bolten, and Steven Herd designed and trained machine learning models to recognize and classify 13,320 videos into 101 categories, each based on an action, such as playing with a yo-yo.

“This research inspires students to learn what the emerging train of thought is in the computer science field. Nowadays, machine learning is very relevant,” says Zhang.

Using Jupyter Notebook, the students designed two models to learn both the spatial and temporal features of videos to conduct the action recognition for all 101 categories.

Herd, Bourne, Bolten, and Professor Zhang used a computer server in Lewis Hall to conduct their research.

The most challenging aspect of the project was accounting for the temporal elements of videos, says Zhang. To identify the actions occurring, the students computed how the positions of objects changed from one still frame of a video to the next. For example, in one frame the yo-yo is low; in the next, it’s high.
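For readers curious what computing the differences between frames can look like, here is a minimal Python sketch. It assumes grayscale frames stored as nested lists of pixel intensities; the function name `frame_differences` and the toy 3x3 "video" are hypothetical illustrations, not the students' code, and the article does not describe exactly how their temporal model was built.

```python
def frame_differences(frames):
    """Return per-pixel absolute differences between each pair of consecutive frames.

    frames: list of 2D grayscale frames (lists of rows of ints), all the same size.
    The result holds len(frames) - 1 difference maps.
    """
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        # Compare each pixel in this frame with the same pixel in the previous frame.
        diff = [
            [abs(c - p) for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)
        ]
        diffs.append(diff)
    return diffs


# Toy 3x3 "video": one bright pixel (the yo-yo) starts low and moves up.
frames = [
    [[0, 0, 0], [0, 0, 0], [0, 255, 0]],  # yo-yo at the bottom
    [[0, 0, 0], [0, 255, 0], [0, 0, 0]],  # yo-yo in the middle
    [[0, 255, 0], [0, 0, 0], [0, 0, 0]],  # yo-yo at the top
]

for i, d in enumerate(frame_differences(frames)):
    print(f"change {i} -> {i + 1}:", d)
# change 0 -> 1: [[0, 0, 0], [0, 255, 0], [0, 255, 0]]
# change 1 -> 2: [[0, 255, 0], [0, 255, 0], [0, 0, 0]]
```

Nonzero values mark the pixels where something moved between frames. A temporal model can take stacks of such difference maps as input instead of raw frames; many action-recognition systems use optical flow for the same purpose, and which representation the lab used is not stated here.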

Connecting to a computing server in the basement of Lewis Hall of Science, the research students ran many iterations of their program to test the recognition accuracy. “It’s exciting to have a machine this powerful to work with,” says Herd, a Class of 2022 graduate from Herndon, Virginia. Herd, along with Bourne, was in Zhang’s machine learning course in spring 2022.

“I gained more experience with machine learning from this summer experience and extended the knowledge that I already have,” says Bourne, a senior from Reisterstown, Maryland. “Machine learning is all around us, in every aspect of every enterprise, so it’s a useful skill for me to have.”

For Bolten, a junior transfer student from Poolesville, Maryland, it was an entirely new experience. “Convolutional neural networks, which I had no idea existed, are a huge part of what we’re working with,” he says. “It’s amazing how quickly you can learn and how much there is to learn. It’s never boring. This was an incredible opportunity, and I’m really happy I was invited to do this project.”

“Connecting the Deaf and the hearing world together.”

Professor Lin with Winford, Tillotson, and Olympio.

Three students in Lin’s lab researched “Sign Language Recognition and Translation using Machine Learning,” which involved training a computer algorithm to identify signs from videos of American Sign Language (ASL).

Lin, who specializes in software engineering and computer architecture, was joined by Yoann Olympio, Pauline Tillotson, and Aaliyah Winford.

Early in the project, the group took the time to learn some ASL. Winford, a sophomore ASL and Computer Science major from White Plains, Maryland, was the only lab member with prior experience using the language. A First Year Seminar course had inspired Winford to enroll in the major and become an interpreter.

“I know the linguistics and how the language works, so we knew what the program needed to capture. For instance, palm orientation and facial expressions,” says Winford.

Lin earned his Ph.D. from the University of North Texas before joining McDaniel and has been doing summer student-faculty research for four years now. ASL, however, was a new subject for him that came with unique challenges.

“Because signing involves the process from the beginning to the end of a word or expression, the same gesture in a different direction could mean different things,” Lin says. “So, the students had to establish a model that can process the time and duration from the beginning to the end of the signing.”

The students trained their computer model on a variety of ASL videos. They used several subsets of the data: one subset had 100 words with 40 to 50 videos per word, and another had 1,000 words with 11 to 25 videos per word.

Winford practices the sign for "Uncle" while Tillotson reviews the videos representing it.

The model uses a 3D convolutional neural network, which slides its filters across the height and width of each frame and across consecutive frames, so it can pick up how a sign unfolds over time before classifying it. According to Tillotson, a junior Computer Science major from Herndon, Virginia, “This involves a surprising amount of coding and a lot of error messages.”
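The idea behind a 3D convolution can be shown with a small, unoptimized Python sketch. It applies a single filter with no padding and a stride of one, computing cross-correlation as CNN layers do; the names `conv3d`, `volume`, and `kernel` are hypothetical, and this is not the lab's model. The example kernel spans two consecutive frames and subtracts the earlier one from the later one, something a 2D filter that sees only one frame at a time cannot do.

```python
def conv3d(volume, kernel):
    """Valid (no padding), stride-1 3D cross-correlation with a single filter.

    volume: nested lists indexed [t][y][x] (frames x height x width).
    kernel: nested lists indexed [dt][dy][dx].
    Returns nested lists of shape (T-kt+1) x (H-kh+1) x (W-kw+1).
    """
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                # Weighted sum over a small block spanning time, height, and width.
                total = 0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            total += volume[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(total)
            plane.append(row)
        out.append(plane)
    return out


# Toy clip: three 2x2 frames in which a single bright "hand" pixel moves.
volume = [
    [[1, 0], [0, 0]],
    [[0, 1], [0, 0]],
    [[0, 0], [0, 1]],
]

# Kernel of shape 2x1x1: (later frame) minus (earlier frame) at each pixel.
kernel = [[[-1]], [[1]]]

print(conv3d(volume, kernel))
# → [[[-1, 1], [0, 0]], [[0, -1], [0, 1]]]
```

A real 3D CNN learns many such kernels, each typically spanning several pixels in every direction, and stacks them with nonlinear activations and pooling before a final classification layer. Deep learning frameworks provide this operation as a ready-made layer (for example, PyTorch's `torch.nn.Conv3d`); the article does not say which framework the students used.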

Action recognition in videos is already difficult, especially for similar motions, explains Winford, and many ASL signs are similar. The signs for “idea” and “if” as well as “happy” and “scared” are easily confused by humans, let alone computer models. The model may also place too much importance on certain aspects of a video, like a person’s shirt color or facial hair.

Olympio, of Germantown, Maryland, is interested in one day working with autonomous vehicles, so he values this experience in machine learning. A junior Physics major specializing in Engineering with a minor in Computer Science, Olympio says, “This is something a lot of jobs are looking for, so it’s a great resume builder. I’ve learned so much and this is a step closer to my goals.”

Training a machine learning algorithm to identify ASL in videos has many potential real-world applications, such as better automatic interpretation and new ways for people to learn ASL.

“The Deaf community could really use an advanced version of this project, because the amount of available human interpreters sometimes falls short,” says Winford. “This is a way of connecting the Deaf and the hearing world together to move toward better understanding one another.”

In addition to enjoying the nonlinear nature of summer research, the students have learned new skills, like extensive coding in Python. Tillotson has enjoyed the ability to explore new topics in machine learning, while also being “intensely focused” on the main project. “When something goes wrong, I can spend hours reading the documentation and exploring new techniques,” she says.

Winford, who plans to become a lawyer in the future, especially enjoyed “merging the two different worlds that I’ve been living in at McDaniel: the progression of the Deaf community and the advancement of my computer science knowledge.”